How to Hack AI Agents and Applications

I often get asked how to hack AI applications. There hadn’t been a...

Evergreen essays or posts that were particularly well received.

I often get asked how to hack AI applications. There hadn’t been a...

Heads‑up: The concept of this post might seem trivial, but it can ...

Over 10 years ago, I put together a self “liturgy” of sorts (basically just a prayer) that I love reading. It takes a bunch of my favorite verses but changes them to the first-person perspective. There’s something about first person that makes it much more powerful and personal. As you read this, I pray it encourages you greatly.

Over 10 years ago, I put together a self “liturgy” of sorts (basically just a prayer) that I love reading. It takes a bunch of my favorite verses but changes them to the first-person perspective. There’s something about first person that makes it much more powerful and personal. As you read this, I pray it encourages you greatly.

OKAY. OKAY. OKAY. It can be a vulnerability. But it’s almost never the root cause.

OKAY. OKAY. OKAY. It can be a vulnerability. But it’s almost never the root cause.

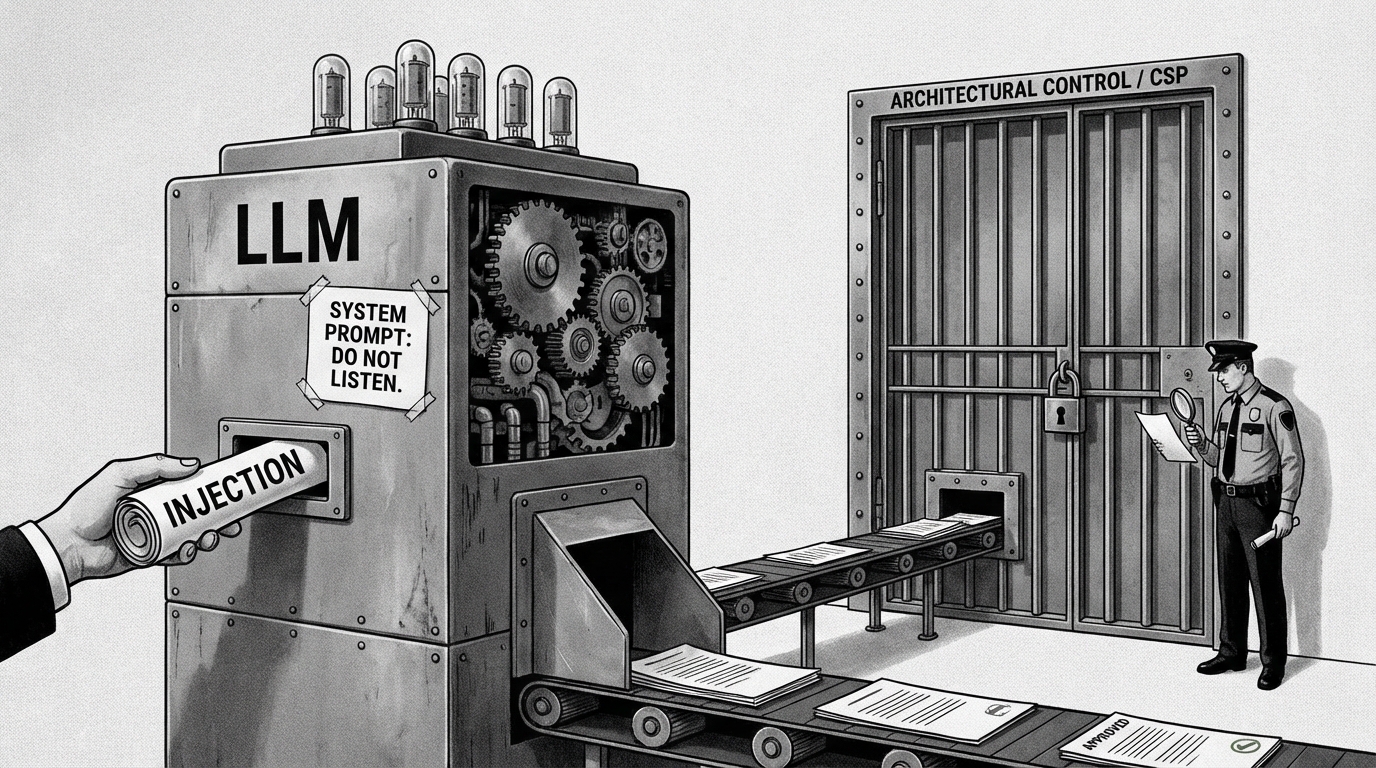

When exploiting AI applications, I find myself using this technique really often so I figured I’d write a quick blog about it. I call it the “Metanarrative Prompt Injection.” You might have already used this before, and it might already have another name. It’s basically like breaking the fourth wall, so to speak, by directly addressing the top level AI or a specific processing step in a way that influences its behavior. And it’s pretty effective.

When exploiting AI applications, I find myself using this technique really often so I figured I’d write a quick blog about it. I call it the “Metanarrative Prompt Injection.” You might have already used this before, and it might already have another name. It’s basically like breaking the fourth wall, so to speak, by directly addressing the top level AI or a specific processing step in a way that influences its behavior. And it’s pretty effective.

There’s an AI Security and Safety concept that I’m calling “AI Comprehension Gaps.” It’s a bit of a mouthful, but it’s an important concept. It’s when there’s a mismatch between what a user knows or sees and what an AI model understands from the same context. This information gap can lead to some pretty significant security issues.

There’s an AI Security and Safety concept that I’m calling “AI Comprehension Gaps.” It’s a bit of a mouthful, but it’s an important concept. It’s when there’s a mismatch between what a user knows or sees and what an AI model understands from the same context. This information gap can lead to some pretty significant security issues.

In bug bounty hunting, having a short domain for XSS payloads can be the difference in exploiting a bug or not… and it’s just really cool to have a nice domain for payloads, LOL.

In bug bounty hunting, having a short domain for XSS payloads can be the difference in exploiting a bug or not… and it’s just really cool to have a nice domain for payloads, LOL.